When Traditional Forecasting Falls Short: A Practical Path to Context-Aware Predictions

Becks Simpson

Healthcare forecasting doesn't follow a playbook. One measure might respond to targeted interventions with sudden jumps, while another shows gradual improvements tied to long-term care management. Predicting these requires understanding the context that drives them, not just the historical numbers. We ran into this when building forecasts for healthcare quality measures. Traditional approaches looked solid on paper but fell apart against real-world complexity.

The Traditional Forecasting Dead End

The initial approach seemed straightforward: use ARIMA models in Python for time series forecasting. These statistical models work well for many applications, but healthcare measures introduced complications. Each model needed hyperparameter tuning. The deployment infrastructure required significant engineering work. Most critically, getting these models into production would have consumed resources better spent elsewhere.

We pivoted to Snowflake's time series models, which handle many forecasting best practices out of the box. The models performed reasonably well by standard metrics—MAE, RMSE, and MAPE were acceptable. But when subject matter experts (SMEs) reviewed the predictions, something was off. The forecasts didn't align with their understanding of how these measures actually behave.
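For readers less familiar with these metrics, here is a short sketch that computes all three from their standard definitions in plain Python. It is a generic illustration, not Snowflake's implementation; the function names and sample numbers are our own.

```python
import math


def _check(actual, predicted):
    # All three metrics compare paired observations, so the inputs must line up.
    if not actual or len(actual) != len(predicted):
        raise ValueError("actual and predicted must be non-empty and the same length")


def mae(actual, predicted):
    """Mean absolute error: the average size of a miss, in the measure's own units."""
    _check(actual, predicted)
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error: like MAE, but penalizes large misses more heavily."""
    _check(actual, predicted)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent. Undefined if any actual value is 0."""
    _check(actual, predicted)
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative (made-up) quarterly scores and forecasts for one measure.
actual = [70.0, 72.0, 75.0, 80.0]
predicted = [71.0, 72.0, 73.0, 76.0]
print(f"MAE={mae(actual, predicted):.2f}  RMSE={rmse(actual, predicted):.2f}  "
      f"MAPE={mape(actual, predicted):.2f}%")
```

All three scores only measure agreement with observed history, which is why acceptable numbers here could coexist with forecasts that SMEs disagreed with.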

This disconnect revealed the core problem: statistical models learn patterns from historical data alone. They have no way to incorporate domain knowledge about seasonal scheduling patterns, intervention timing, or operational constraints, which SMEs know matter but which don't show up in the numbers.

Context Changes Everything

The solution turned out to be simpler than expected. Instead of more sophisticated statistical modeling, we turned to large language models designed for advanced reasoning. Using Gemini with templated prompts, we built a context-aware forecaster that combines historical data with explicit domain knowledge.

The approach works like this: for each prediction, the model receives the numerical history plus a brief describing relevant context, such as "annual screening campaigns typically drive a 15% increase in Q4," "medication adherence starts strong but falls off toward the end of the year," or "the plan engaged a vendor to drive compliance for eye disease screening, which will correspond to a 5-10% score increase." The model then reconciles what the data shows with what we know about how these measures actually move and about the external factors affecting them at the time of prediction.
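As a rough illustration, a templated prompt of this kind might be assembled as follows. This is a minimal sketch under assumptions, not the exact template we used: `MeasureContext`, `PROMPT_TEMPLATE`, and `build_forecast_prompt` are hypothetical names, the measure name and scores are made up, and the call to Gemini itself is deliberately left out since it depends on the client library you use.

```python
from dataclasses import dataclass, field


@dataclass
class MeasureContext:
    """Hypothetical container for one measure's history and SME context brief."""
    measure_name: str
    history: list[tuple[str, float]]  # (period label, score) pairs, oldest first
    context_notes: list[str] = field(default_factory=list)  # SME-provided context lines
    horizon: int = 3  # number of future periods to forecast


PROMPT_TEMPLATE = """You are forecasting the healthcare quality measure "{measure_name}".

Historical scores (oldest first):
{history_block}

Context from subject matter experts:
{context_block}

Reconcile the historical trend with the context above and forecast the next {horizon} periods.
Respond with one line per period in the form "<period>: <score>", then a one-sentence rationale."""


def build_forecast_prompt(ctx: MeasureContext) -> str:
    """Fill the template with the numerical history and the context brief."""
    history_block = "\n".join(f"- {period}: {score:.1f}" for period, score in ctx.history)
    # Fall back to an explicit placeholder so the model knows no context was given.
    context_block = "\n".join(f"- {note}" for note in ctx.context_notes) or "- (none provided)"
    return PROMPT_TEMPLATE.format(
        measure_name=ctx.measure_name,
        history_block=history_block,
        context_block=context_block,
        horizon=ctx.horizon,
    )


# Example usage with illustrative values only.
ctx = MeasureContext(
    measure_name="Eye Exam for Patients with Diabetes",
    history=[("2024-Q1", 48.0), ("2024-Q2", 51.5), ("2024-Q3", 53.0)],
    context_notes=[
        "The plan engaged a vendor to drive compliance for eye disease screening, "
        "which will correspond to a 5-10% score increase."
    ],
)
prompt = build_forecast_prompt(ctx)
print(prompt)
```

Keeping the template fixed and varying only the history and context notes makes it easier for SMEs to review exactly what the model was told for each forecast.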

SMEs immediately preferred these forecasts. The predictions both matched historical patterns and reflected the intricate rules and expectations around how scores actually change. When a forecast showed a jump in October, it wasn't because the algorithm had spotted a pattern; it was because the model understood that October is when the intervention happens.

This aligns with a broader pattern we see in applied AI: the most effective solution is usually the one that matches how the domain actually works, not the one with the most complex algorithm.

When to Use Context-Aware Forecasting

This approach excels when:

- Domain knowledge significantly impacts outcomes but doesn't always show up in the data

- Subject matter experts can articulate rules, constraints, and drivers

- Historical data is limited or contains explainable anomalies

- Interpretability matters—stakeholders need to understand why a prediction was made

It's less appropriate when:

- You have abundant historical data with stable patterns

- External factors are either negligible or already captured in the data

- Cost and latency are critical constraints

Context-aware LLM forecasting can also fail more dramatically than traditional statistical models: predictions might overshoot by large margins, so adding guardrails and constraints is important. Ensembling can help as well. Combining a solid statistical baseline with the context-aware forecast limits the downside while still capturing the upside from domain knowledge.
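One simple way to combine guardrails with ensembling, sketched under assumptions: clip the LLM forecast to a band around a statistical baseline, take a weighted average of the two, and then enforce the measure's hard bounds. The function name, the band width, the 50/50 default weight, and the 0-100 bounds are hypothetical choices for illustration, not the values used in this project, and a weighted average is only one of many possible ensembling schemes.

```python
def guarded_forecast(llm_value, baseline_value, max_deviation,
                     llm_weight=0.5, floor=0.0, ceiling=100.0):
    """Blend an LLM forecast with a statistical baseline, with guardrails.

    Hypothetical helper: all thresholds are illustrative assumptions, not tuned values.
    """
    if not 0.0 <= llm_weight <= 1.0:
        raise ValueError("llm_weight must be between 0 and 1")
    if max_deviation < 0:
        raise ValueError("max_deviation must be non-negative")
    # Guardrail 1: keep the LLM forecast within +/- max_deviation of the baseline.
    clipped = min(max(llm_value, baseline_value - max_deviation),
                  baseline_value + max_deviation)
    # Ensemble: weighted average of the clipped LLM forecast and the baseline.
    blended = llm_weight * clipped + (1.0 - llm_weight) * baseline_value
    # Guardrail 2: respect the measure's hard bounds (e.g. a 0-100 percentage score).
    return min(max(blended, floor), ceiling)


# An LLM forecast of 90 against a baseline of 70 is first clipped to 80,
# then averaged with the baseline.
print(guarded_forecast(llm_value=90.0, baseline_value=70.0, max_deviation=10.0))
```

Raising `llm_weight` trusts the domain-informed forecast more; tightening `max_deviation` caps how far any single context note can move the prediction away from the baseline.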

Summary

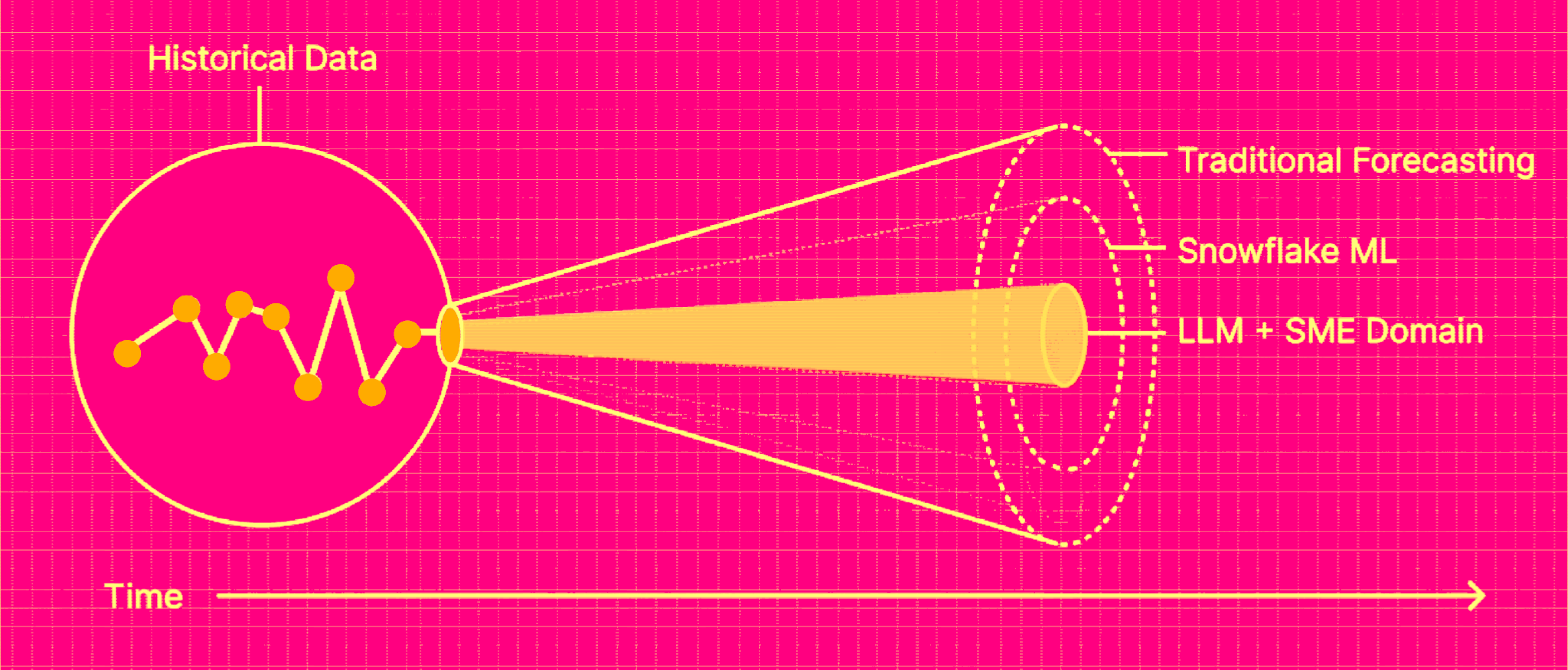

Moving from ARIMA to Snowflake models to LLM-based forecasting wasn't a linear progression toward "better" methods. Each approach has tradeoffs. Traditional statistical models offer proven reliability and interpretability grounded in their mathematical basis. LLM-based forecasting offers the ability to encode complex domain logic that data alone can't capture.

The key insight: when forecasting involves clear causal relationships and describable rules—not just historical patterns—context-aware approaches can bridge the gap between what the data shows and what domain experts know to be true.

For organizations considering this approach, start with domains where SMEs have strong intuitions about what drives outcomes. Build templates that systematically capture that knowledge. And be honest about measurement constraints—sometimes "trusted by the people who use it" is the validation metric that actually matters, even if it's not the one we'd prefer to report.

Interested in learning how Toboggan Labs can help your organization navigate similar AI implementation challenges? Contact us to discuss your specific use case.